As data and AI move to the center of enterprise value creation, legacy systems aren’t just slowing data teams—they’re blocking AI at scale. Still relying on Hadoop? The clock is ticking on your data + AI potential. Migration to Databricks is the one imperative that enables your enterprise to operationalize AI, accelerate innovation, and unlock real-time intelligence.

For over a decade, Hadoop provided a viable framework for distributed storage and compute at scale. But for today’s AI-native organizations, its architecture has become a bottleneck. Rigid schema enforcement, batch-centric processing, tightly coupled storage-compute, and escalating ops overhead have made it increasingly infeasible to sustain innovation velocity.

Hadoop’s inherent limitations—manual tuning, poor elasticity, lack of built-in ML tooling, and costly maintenance cycles—are now amplified in environments where operational SLAs are measured in minutes, not hours. The delta between what business teams require and what Hadoop platforms can deliver has widened into a systemic misalignment between infrastructure and insight.

Across industry verticals, platform teams are migrating Hadoop workloads to Lakehouse architectures—specifically, the Databricks Lakehouse Platform—not just to cut cost but to re-architect for elasticity, interoperability, and AI scalability.

This blog outlines the hidden value your organization can capture by migrating to Databricks—switching from a legacy burden into a growth catalyst.

Hidden Costs of Staying on Hadoop

The most visible rationale for Hadoop migration—licensing costs—barely scratch the surface. The real costs are embedded across operations. The case for migrating to Databricks is driven by four core strategic considerations:

- Infrastructure: Eliminate architectural bottlenecks by decoupling storage and compute, enabling elastic scale, workload isolation, and AI-native performance.

- Cost of Ownership: Reduce infrastructure spend (sourcing, powering, and managing) and increase sales and performance.

- Productivity: Increase productivity and collaboration among data scientists and data engineers by eliminating manual tasks.

- Business impacting use cases: Accelerate and expand the realization of value from business-oriented use cases.

1. Infrastructure:

At its core, the Databricks Lakehouse is not a cloud-hosted replica of Hadoop—it is an architectural reset. The design principles are clear:

- Separation of storage and compute using Delta Lake on cloud object storage (e.g., ADLS Gen2) enables dynamic autoscaling, workload isolation, and lower TCO.

- ACID-compliant Delta tables allow seamless support for both batch and streaming ingestion, with time travel, upserts, and schema evolution as first-class primitives.

- Native support for ML and real-time analytics eliminates brittle integrations across disparate stacks.

- Governance-as-code via Unity Catalog provides a policy-enforced metadata plane—centralized, lineage-aware, and fully audit-ready from ingestion to activation.

This is not a lift-and-shift model. It’s a decoupled, unified data and ML architecture designed for governed collaboration and operational intelligence.

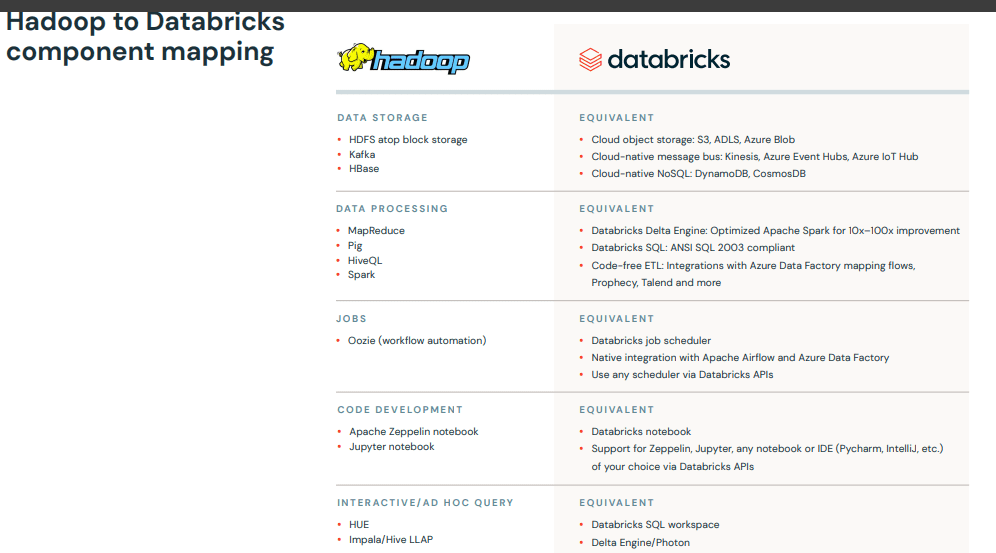

Exhibit 1: Hadoop to Databricks component map

2. Cost of Ownership:

As more companies migrated to modern cloud data and AI platforms, Hadoop providers have raised licensing costs to make up for their losses, which is only accelerating the migration. Organizations tend to focus on the comparative costs of licensing, and Hadoop’s subscription fees alone make a compelling case to migrate. The deeper truth is platform migrations are less about feature parity and more about securing the strategic foundation for long-term value creation. To get a true sense of what Hadoop is costing your organization, you have to step back.

From a benchmark of 10 Databricks customers, it was found that licensing accounts for less than 15% of the total cost — it’s the tip of the iceberg. The other costs are made up of the following:

- Data center overhead: Power, cooling, and real estate can consume up to 50% of total spend for a 100-node cluster. At $800 per server per year, that’s $80K/year in electricity alone.

- Hardware and upgrades: Tightly coupled storage and compute architectures compel enterprises to adopt asymmetric scaling of compute resources.

- Cluster administration: A typical 100-node cluster requires 4–8 FTEs just to maintain SLAs and manage versions, not to mention the productivity cost of slow, brittle pipelines.

CAPEX vs. OPEX: Pay Only for What Is Used

Databricks is priced based on consumption — you only pay for what you use. But Databricks is a more economical solution in other ways too:

- Autoscaling ensures customers only pay for the infrastructure they use

- With a cloud-based platform, capacity can scale to meet changing demand instantly, not in days, weeks, or months.

- Storage and compute are kept separate, so adding more storage does not require adding expensive compute resources at the same time.

- With Databricks, organizations can tailor performance to purpose—leveraging GPUs for high-demand workloads while minimizing cost on lower-priority operations.

- Expensive data center management and hardware costs disappear entirely.

3. Raising Productivity

From a platform engineering perspective, Databricks eliminates the redundant glue code, handoffs, and orchestration complexity typical of Hadoop-based stacks. Through a unified development experience across SQL, Python, Scala, and R—backed by interactive notebooks and version-controlled jobs—teams converge around a single interface.

Key productivity enablers:

- Delta Live Tables for declarative pipeline management with auto lineage tracking

- Native support for structured streaming and change data capture (CDC)

- Integrated MLflow for experiment tracking, model versioning, and deployment

- BI connector support for tools like Power BI, Tableau, and Looker—no extract-and-load friction

This unified ecosystem drives 10x iteration speed for many data teams, especially in organizations migrating from custom Spark-on-YARN or Hive-on-HDFS pipelines.

4. Business-Impacting Use Cases

With Databricks, customers are able to move beyond the limitations of Hadoop and finally address business-critical use cases. These organizations find that the value unlocked by a modern cloud-native data and AI platform far exceeds the cost of migration—driven by its ability to support more advanced use cases, at greater scale, and at significantly lower cost.

- Real-time fraud detection via DNS or transaction logs

Real-time fraud detection has shifted from reactive forensic analysis to continuous prevention, enabled by real-time telemetry from DNS and transactional logs. Databricks’ ability to process and score threats dynamically gives security teams the lead time to contain breaches before they escalate, reducing both financial loss and reputational risk.

- Customer churn and CLV models operationalized with streaming telemetry

Customer churn and lifetime value modeling have also evolved. Rather than relying on monthly refreshes of static dashboards, organizations can now operationalize streaming inputs—usage patterns, support interactions, product telemetry—to proactively identify at-risk segments and optimize retention interventions. Marketing and finance functions gain a shared view of the customer that enables precision across both budget allocation and forecast planning.

- ESG compliance and sustainability analytics through geospatial joins at scale

In ESG compliance and sustainability reporting, enterprises leverage Databricks to integrate real-time geospatial feeds with regulatory logic. This allows organizations to not only track their carbon and environmental footprint more effectively but to model alternative scenarios and improve operational sustainability strategies in-flight.

- Clinical outcome forecasting on multimodal datasets with GPU acceleration

For healthcare and life sciences, Databricks enables clinical outcome forecasting using multimodal data integration. Structured EHR data is joined with imaging diagnostics and genomic sequences, and processed in parallel using GPU acceleration. The result is faster risk stratification, more personalized treatment recommendations, and lower latency between clinical events and insight.

- Ad spend attribution and multi-touch marketing pipelines over terabyte-scale events

In digital advertising and brand management, the platform’s ability to support petabyte-scale processing allows marketing teams to move beyond post-campaign reports. Real-time attribution, budget optimization, and audience segmentation now happen continuously, based on actual engagement streams across channels. The implication: greater ROI per campaign cycle and more agile go-to-market execution.

Architecting the Exit: Don’t Recreate Hadoop in the Cloud

Hadoop workloads are rarely clean. Over time, they evolve into fragmented layers of ETL pipelines, interdependent Hive jobs, and fragile orchestration scripts. The result is deeply entangled systems with low observability, undocumented logic, and high change risk. For platform leaders, this creates a dilemma: how to migrate without replicating technical debt—or triggering regression in business-critical workflows.

Modak brings execution certainty to Hadoop-to-Databricks migrations—combining automation, architectural rigor, and enterprise alignment.

Through an MDP that enables enterprises to automate data ingestion, profiling and curation at petabyte scale—Nabu—and a KPI-aligned delivery model, Modak enables enterprises to execute large-scale Hadoop-to-Databricks migrations with architectural discipline, embedded governance, and reduced time-to-value.

- Automated Discovery and Lineage Mapping

- Nabu data crawlers connect to the source data—boosting bulk ingestion pipelines ~95%.

- Dynamically infers job dependencies via DAG construction.

- Tags workloads by business impact to prioritize high-value refactoring, not just high-volume workloads.

- Produces a complete modernization blueprint—including lineage metadata—in days, ready for audit and governance.

2. Production-Ready Spark Pipelines—Generated, Not Rewritten

- Converts legacy ETL into Spark-native pipelines optimized for Databricks Lakehouse architecture.

- Delivers:

- Partition-aware transformations and adaptive execution plans

- Native Delta Lake integration with ACID-compliant writes for open table formats

- Git-based CI/CD scaffolding for DevSecOps integration

- Customers retain full code ownership, with the option for Modak to manage operations post-migration.

3. Embedded Cost Controls and Enterprise-Grade Observability

- All jobs instrumented for monitoring—logs routed to Datadog, Grafana, or cloud-native tools.

- Autoscaling, spot instances, and cluster pooling enabled by default, yielding up to 35% compute savings.

- No governance retrofit required—Unity Catalog embeds fine-grained policy enforcement and end-to-end data lineage as foundational capabilities from the outset.

Exhibit 2: Value impact of direct migration

Closing the Gap Between Ambition and Architecture

For infrastructure leaders, this transition is more than platform modernization—it’s the creation of a scalable, collaborative, and governed foundation for enterprise-wide data activation. The Lakehouse isn’t just a Hadoop successor. It’s the convergence point of performance, trust, and AI readiness.

For every platform team weighed down by infrastructure complexity and unmet SLAs, the message is clear: Hadoop served its purpose. But in a cloud-native, AI-led landscape, it’s time to architect what comes next.

Run a no-risk discovery engagement with Modak and receive a blueprint that quantifies technical feasibility and business value to unlock your AI advantage.